Introduction

Many recent advances in satellite imagery and data analysis have been directed toward automating analysis and improving the world’s agriculture. At the heart of this work, though, lies a problem often neglected in these conversations: how do farmers and data analysts work together to generate the insights needed to improve crop yield?

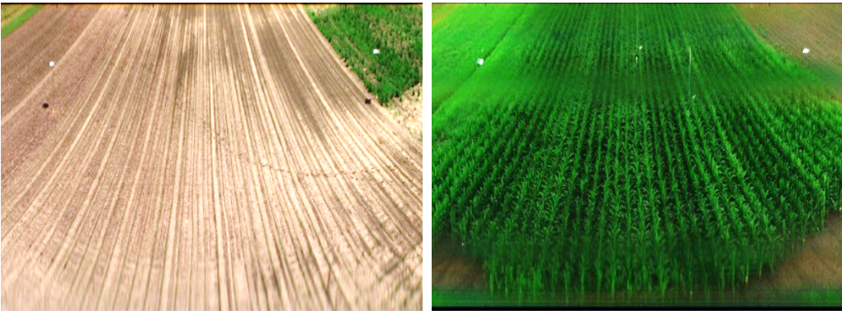

To illustrate the issue, consider the three images shown above. Each image shows the same field from roughly the same vantage point, with roughly the same camera angle, zoom, and perspective. Over time, the field is sown and crops begin to grow. As the season progresses, the vegetation thrives, completes its life cycle, and begins to die.

In an ideal situation, the spatiotemporal and spectrotemporal data captured by the camera could be stacked directly, since the camera would never have moved or rotated. This case is far from ideal: each morning the camera was placed on a stand, the picture was taken, and the camera was removed. As a result, the camera’s attitude (its orientation) differed slightly from image to image, and the zoom, aperture settings, and lighting conditions changed as well. So how would an image processing expert go about stacking the images?

Stack it like it’s hot

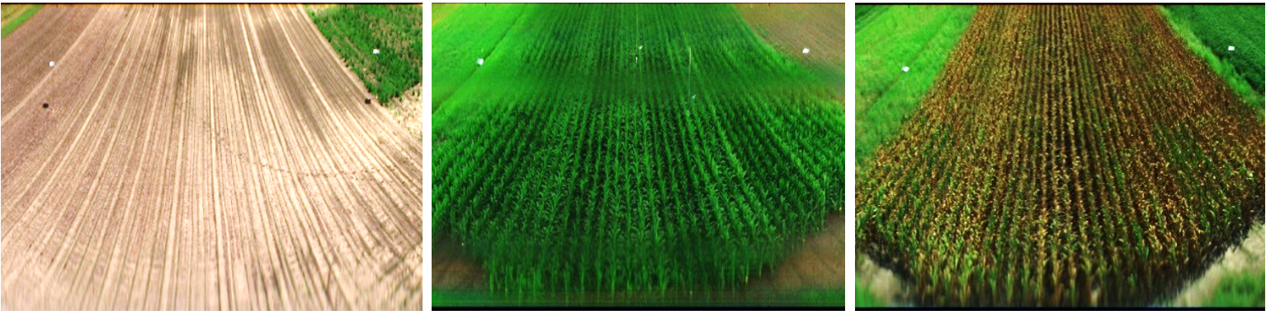

The key to stacking images that shift and move over time lies in identifying the elements of the scene that stay most consistent. Although the dataset used for this study was not perfect, the most consistently present elements were the fence posts dividing the crop fields, and more specifically the white panels mounted on top of them.

Using a method similar to the one discussed in a previous article, each image captured by the camera can be split into its red, green, and blue (RGB) components. These components can then be recombined algebraically to produce secondary images that highlight specific materials in the environment based on their unique spectral signatures. In this case, white is fairly easy to isolate from the browns and greens common to most crop fields: pure white is the color in which all three channels are at their maximum value, so white pixels are those whose red, green, and blue values are all high and roughly equal. A priori sign identification may begin by visually identifying candidate regions, as shown below.
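The thresholding step can be sketched in plain Python as follows. This is a minimal, dependency-free illustration under stated assumptions, not the article’s own code: the image is assumed to be a list of rows of 8-bit `(r, g, b)` tuples, and the `threshold` and `max_spread` defaults are hypothetical values that would need tuning for real lighting. The “algebraic recombination” here is simply the per-pixel minimum and maximum of the three channels.

```python
def white_mask(image, threshold=200, max_spread=30):
    """Return a 2D list of booleans marking near-white pixels.

    image: list of rows, each a list of (r, g, b) tuples with 0-255 channels.
    threshold: hypothetical minimum value that every channel must reach.
    max_spread: hypothetical maximum gap between the brightest and darkest
        channel, so bright but saturated colors (e.g. yellow) are rejected.
    """
    mask = []
    for row in image:
        mask_row = []
        for r, g, b in row:
            brightest = max(r, g, b)
            darkest = min(r, g, b)
            # White: every channel is high AND the channels are nearly equal.
            mask_row.append(darkest >= threshold and brightest - darkest <= max_spread)
        mask.append(mask_row)
    return mask


# Usage: soil, white panel, leaf / white panel, yellow leaf, soil.
field = [
    [(120, 90, 60), (250, 248, 245), (60, 140, 50)],
    [(245, 250, 240), (230, 240, 90), (110, 85, 55)],
]
print(white_mask(field))  # [[False, True, False], [True, False, False]]
```

In practice the same rule would usually be written as vectorized array operations (for example with NumPy), but the per-pixel logic is identical.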

After all white pixels in the scene have been identified, clustering can separate them into two groups, one for each of the two white plates visible in every image. Once the centers of the white plates are identified and indexed, it is straightforward to find the outlines of the white signs with an edge detector such as Canny. Once the sign locations and outlines are established in a reference image, each subsequent image can be shifted and scaled (and, if needed, rotated) so that its signs line up with those in the reference image.
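The clustering and alignment steps can be sketched as below, again as an illustration under stated assumptions rather than the method actually used in this study. `two_means` is plain k-means with k = 2 on the coordinates of the white pixels; it assumes a non-empty mask containing exactly two panels separated horizontally, and starts from the leftmost and rightmost white pixels. The hypothetical helper `similarity_from_two_points` then treats each panel center as a complex number, so a single complex factor `a` captures scale and rotation and a complex offset `b` captures translation. Canny edge extraction is omitted; OpenCV’s `cv2.Canny` is one widely used implementation.

```python
import cmath
import math


def white_pixel_coords(mask):
    """Collect (x, y) coordinates of every True pixel in a boolean mask."""
    return [(x, y) for y, row in enumerate(mask) for x, is_white in enumerate(row) if is_white]


def two_means(points, iterations=20):
    """Split (x, y) points into two clusters with k-means (k = 2).

    Assumes the two panels are separated horizontally, so the leftmost and
    rightmost points are reasonable starting centers. Returns the two
    cluster centers sorted left to right.
    """
    centers = [tuple(map(float, min(points))), tuple(map(float, max(points)))]
    for _ in range(iterations):
        groups = ([], [])
        for p in points:
            # Assign each point to its nearest current center.
            nearest = 0 if math.dist(p, centers[0]) <= math.dist(p, centers[1]) else 1
            groups[nearest].append(p)
        new_centers = []
        for group, old in zip(groups, centers):
            if group:
                mean_x = sum(x for x, _ in group) / len(group)
                mean_y = sum(y for _, y in group) / len(group)
                new_centers.append((mean_x, mean_y))
            else:
                new_centers.append(old)  # keep an empty cluster's center in place
        if new_centers == centers:
            break  # converged: assignments no longer change the centers
        centers = new_centers
    return sorted(centers)


def similarity_from_two_points(src, dst):
    """Return complex (a, b) such that dst = a * src + b for both point pairs.

    abs(a) is the scale factor, cmath.phase(a) the rotation in radians,
    and b the translation.
    """
    s1, s2 = complex(*src[0]), complex(*src[1])
    d1, d2 = complex(*dst[0]), complex(*dst[1])
    a = (d2 - d1) / (s2 - s1)
    b = d1 - a * s1
    return a, b


def apply_similarity(a, b, point):
    """Map one (x, y) point through the similarity transform."""
    z = a * complex(*point) + b
    return (z.real, z.imag)


# Usage: panel centers in a later image (src) mapped onto the reference (dst).
later_centers = [(0.0, 0.0), (1.0, 0.0)]
reference_centers = [(2.0, 3.0), (2.0, 5.0)]
a, b = similarity_from_two_points(later_centers, reference_centers)
print(abs(a), math.degrees(cmath.phase(a)), apply_similarity(a, b, (1.0, 1.0)))
```

Once `a` and `b` are known for an image, every pixel can be resampled into the reference frame, for example with OpenCV’s `cv2.warpAffine`, using the equivalent 2x3 matrix `[[a.real, -a.imag, b.real], [a.imag, a.real, b.imag]]`. Sorting the centers left to right only pairs the panels correctly while rotations stay small.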

But why is it important to line up each image based on spectral signatures? The short answer is that a robust algorithm that extracts a given color and correlates pixels across time is useful in many image processing applications. In the case of crop analysis, spatially and spectrally aligned images enable a farmer to estimate rough crop height and to spot unexpected problems well before individual plants show signs of dying. In turn, knowing which specific plants are dying lets a farmer locate the epicenter of an issue that could otherwise spread throughout the entire crop.

Robust spectral indexing

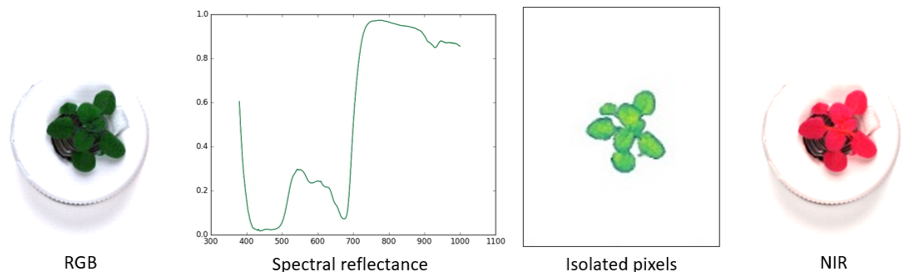

Of course, there are many more applications where per-pixel identification of plant health matters. Zooming in on the previous situation, imagine that a scientist is studying the effects of an external chemical agent on a plant. Using an algorithm very similar to the one discussed above, the researcher could create high-fidelity time-lapse data of a single plant grown from a seedling. For the sake of argument, the seedling could even come from the same field examined above.

As illustrated above, the RGB color channels of a small plant can be separated just as easily as those of a large field. Because the single plant occupies a much larger portion of the frame, individual pixels represent not individual plants but individual sections of each leaf, stem, and so on. This matters because, if the researcher exposes the plant to a newly developed chemical agent, they will be able to tell which regions of the plant are affected by the agent first and which remain relatively unharmed.
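The article does not name a specific index for this per-pixel comparison, so the sketch below assumes the Excess Green index (ExG = 2g - r - b, computed on chromaticity-normalized channels), a common RGB vegetation index. It flags pixels whose ExG drops by more than a hypothetical `drop` threshold between two already-aligned frames, which is one way to see which regions of the plant respond to the agent first.

```python
def excess_green(pixel):
    """Excess Green index ExG = 2g - r - b on chromaticity-normalized channels.

    pixel is an (R, G, B) tuple; a black pixel returns 0.0 to avoid
    dividing by zero.
    """
    red, green, blue = pixel
    total = red + green + blue
    if total == 0:
        return 0.0
    r, g, b = red / total, green / total, blue / total
    return 2 * g - r - b


def declining_pixels(before, after, drop=0.1):
    """List (x, y) pixels whose ExG fell by more than `drop` between frames.

    Assumes `before` and `after` are aligned images of identical size, given
    as lists of rows of (R, G, B) tuples. `drop` is a hypothetical threshold.
    """
    flagged = []
    for y, (row_before, row_after) in enumerate(zip(before, after)):
        for x, (p_before, p_after) in enumerate(zip(row_before, row_after)):
            if excess_green(p_before) - excess_green(p_after) > drop:
                flagged.append((x, y))
    return flagged


# Usage: the second pixel has yellowed between the two frames.
day_1 = [[(60, 180, 60), (60, 180, 60)]]
day_5 = [[(60, 180, 60), (150, 130, 60)]]
print(declining_pixels(day_1, day_5))  # [(1, 0)]
```

Normalizing by R + G + B makes the index less sensitive to overall brightness, which helps when lighting changes from one capture to the next.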

Another option open to the researcher is tracking the surface area of leaves over time. In the “Isolated pixels” image in the above illustration, a Canny edge filter (or a clustering algorithm) could again be used to isolate individual leaves. Knowledge of the camera system’s geometry then enables the researcher to calculate a per-leaf surface area and track per-leaf growth over time. This is exactly the type of analysis farmers may find useful when new fertilizers, watering conditions, and similar changes are tested in a laboratory setting before being applied to an entire field of crops.
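Both steps in this paragraph can be sketched as follows, under loudly stated assumptions. Leaves are separated with 4-connected component labeling (a simple alternative to the Canny or clustering approaches mentioned above), and pixel counts are converted to physical area with a pinhole-camera model: a pixel of pitch p, seen through a lens of focal length f, covers a square of side Z·p/f on a plane at distance Z. This assumes each leaf is flat, faces the camera squarely, and lies at a known distance, and it ignores lens distortion; the function names and all camera values are hypothetical.

```python
from collections import deque


def component_sizes(mask):
    """Pixel count of each 4-connected region of truthy pixels in `mask`.

    Uses breadth-first flood fill; regions are reported in the order their
    first (top-left-most) pixel is encountered in a row-major scan.
    """
    height = len(mask)
    width = len(mask[0]) if height else 0
    seen = [[False] * width for _ in range(height)]
    sizes = []
    for y in range(height):
        for x in range(width):
            if not mask[y][x] or seen[y][x]:
                continue
            count = 0
            queue = deque([(x, y)])
            seen[y][x] = True
            while queue:
                cx, cy = queue.popleft()
                count += 1
                # Visit the four edge-sharing neighbours only (4-connectivity).
                for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                    if 0 <= nx < width and 0 <= ny < height and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            sizes.append(count)
    return sizes


def pixel_area_mm2(distance_mm, pixel_pitch_mm, focal_length_mm):
    """Area one pixel covers on a plane perpendicular to the optical axis."""
    footprint_mm = distance_mm * pixel_pitch_mm / focal_length_mm  # side = Z * p / f
    return footprint_mm ** 2


# Usage: two "leaves" in a tiny mask (1 = leaf pixel).
mask = [
    [1, 1, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 1],
]
print(component_sizes(mask))  # [4, 2]
# Hypothetical camera: 500 mm from the leaves, 4 micrometre pixels, 25 mm lens.
area_per_pixel = pixel_area_mm2(500.0, 0.004, 25.0)
print([count * area_per_pixel for count in component_sizes(mask)])
```

Tracking these per-leaf areas across the aligned time-lapse frames then yields a per-leaf growth curve; matching each leaf from one frame to the next (for example by nearest centroid) is a further step not shown here.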

Conclusion

The ability to stack and align multiple images of the same object has a wide variety of applications. From long-term crop analysis to 3D photo-sphere stitching to 3D CAD generation, high-caliber image alignment remains a key component of modern image processing. Although the environments and applications discussed here are centered on agriculture, the image set could easily be swapped for a completely different scenario, and a similar analysis could be done with minimal changes to the core algorithm.